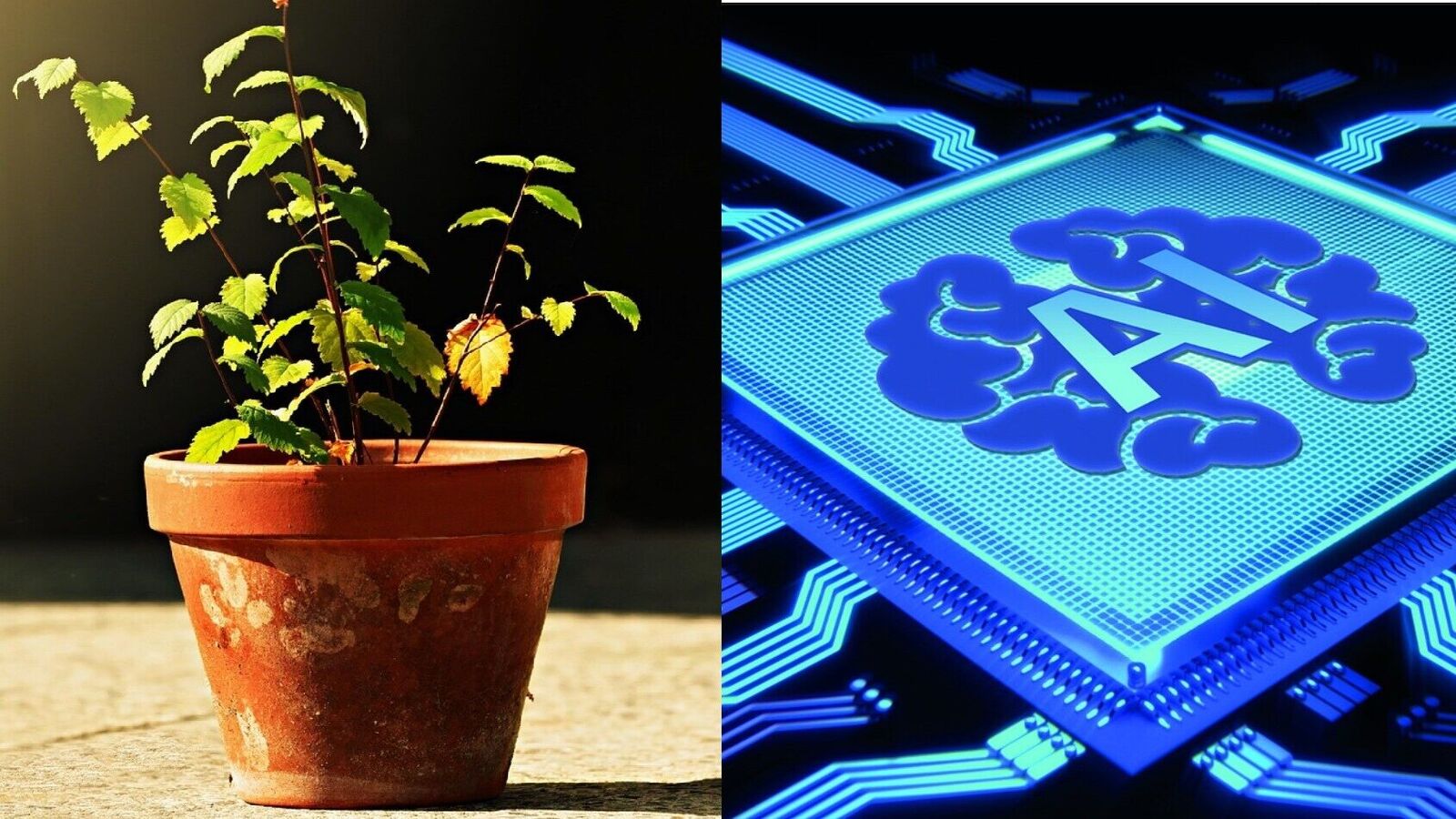

ChatGPT, the generative AI tool that recently made waves online for its Ghibli-style images, is back in the news, this time for a faulty output. A user who asked ChatGPT what was wrong with her plant received a shocking response: someone else’s personal data.

Calling it the “most horrible thing” she has seen AI do, she wrote in a LinkedIn post: “I uploaded some photos of my Sundari (Peacock plant) to ChatGPT. I just wanted help finding out why her leaves were turning yellow.” Instead of plant care advice, ChatGPT gave her another person’s personal details. According to her post, the response contained: “Mr. Chirag’s full resume. His CA student registration number. His principal’s name and ICAI membership number. And it confidently called that Strategic Management notes.”

She attached screenshots of the conversation she claims to have had with the chatbot. ChatGPT is alleged to have leaked someone’s personal information when the woman asked for advice on plant care.

The chartered accountant, Pruthvi Mehta, described the disturbing experience, adding: “I just wanted to save a dying leaf, not accidentally download someone’s entire career. It was funny for 3 seconds, until I realized that it was someone’s personal information.”

The post, which flags the overuse of AI technology, is doing the rounds on social media and has collected more than 900 reactions and numerous comments. Suggesting that this could be a backlash from ChatGPT’s overuse for Ghibli art, she asked: “Can we still keep faith in AI?”

Here is how netizens responded. Strong reactions poured in from internet users, with one noting: “I’m sure the data is made up and incorrect, Pruthvi!” Another user said: “It’s surprising, because the question asked was something completely different.” A third user wrote: “Wonder if these are someone’s real details, or if it’s just manufactured. Either way, it seems fairly important, but it looks more like a mistake in their algorithms.” A fourth user replied: “I don’t see how this is possible unless the whole chat thread has something to do with this.”

ChatGPT leaks personal information: Woman asks AI about her plant, gets shocked by the response, ‘awful thing seen’ | Mint